
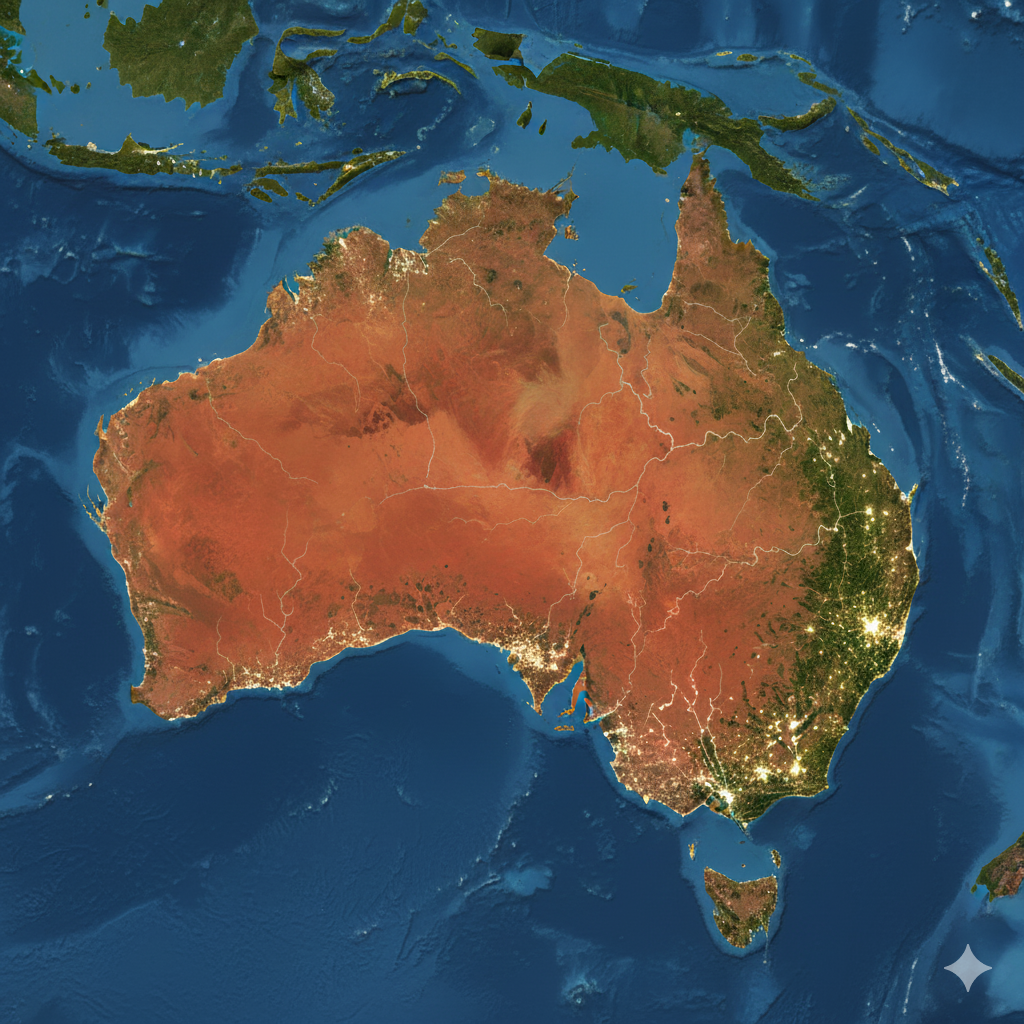

Australia has taken a significant step toward responsible technology governance by announcing a new set of AI Safety Regulations aimed at increasing transparency, strengthening user protection, and ensuring ethical deployment of artificial intelligence across the country. The move follows rising global concerns about AI misuse, algorithmic discrimination, deepfakes, and the lack of accountability among major tech platforms.

The regulations, developed by Australia’s Department of Industry, Science and Resources, are expected to become one of the most comprehensive AI safety frameworks in the Asia-Pacific region. They target large technology companies operating in Australia, including Microsoft, Google, Meta, and Amazon, as well as emerging AI platforms that adopt advanced machine learning tools.

Mandatory Transparency & Safety Disclosures

Under the new framework, tech companies will be required to disclose how their AI systems operate, what datasets they use, and how they prevent bias. These disclosures must be submitted regularly to the Australian Communications and Media Authority (ACMA).

Key requirements include:

- Risk assessments for high-impact AI systems

- Detailed explanations of how training data is sourced

- Proof of bias mitigation strategies

- User warning labels for AI-generated content

- Full documentation for AI systems used in recruitment, finance, and healthcare

This push for transparency aims to prevent harmful outcomes, such as discrimination in job applications or misinformation spread through algorithmic recommendations.

Regulating Deepfakes and AI-Generated Misleading Content

One of the most urgent concerns addressed by the regulations is the rise of deepfakes and manipulated media. Australia has witnessed increasing political misinformation and identity misuse, prompting the government to introduce strict rules on synthetic content.

Platforms must now:

- Flag AI-generated videos, images, and audio

- Detect manipulated political content

- Automatically remove harmful or fraudulent deepfakes

- Provide users with tools to verify authenticity

Violations can lead to heavy penalties, including fines of up to AU$10 million, depending on the severity of the breach.

Consumer Protection Measures Strengthened

To ensure users remain safe while interacting with AI-driven platforms, the new regulations enhance consumer rights through:

- AI incident reporting systems

- Mandatory user consent for biometric data usage

- Clear opt-out options for AI personalization

- Protection for minors against AI-driven tracking and profiling

Children and teenagers, who make up one of the most digitally active groups in Australia, are a major focus, with platforms required to disable certain AI features for underage users by default.

Impact on Businesses and Developers

While the regulations primarily target big tech, Australian businesses using AI tools will also need to follow certain compliance steps. These include conducting standard risk assessments, providing transparency to customers, and ensuring that AI-powered decisions can be reviewed by humans.

Sectors expected to experience major shifts include:

- Healthcare: Stricter monitoring of diagnostic AI tools

- Banking & Finance: Transparency in credit scoring algorithms

- Education: Responsible AI use in evaluation and proctoring systems

- Recruitment: Mandatory human oversight for AI-screened job applications

Tech companies offering AI products in Australia will be given a 12–18 month transition period to redesign their systems and bring them into compliance.

Australia Positions Itself as a Global AI Governance Leader

With jurisdictions such as the EU, UK, and Canada already moving forward with AI legislation, Australia’s new regulations align with international trends while adding local protection measures of their own. Industry experts believe the framework will boost trust in AI adoption, strengthen cybersecurity, and promote innovation in safe, ethical ways.

Government officials emphasized that the goal is not to restrict AI development but to ensure it grows responsibly. The country will continue to invest in AI education, research grants, and domestic innovation while prioritizing user safety and transparency.

As AI continues to evolve rapidly, Australia’s proactive stance sets the foundation for a future where technology benefits society without compromising ethics or safety.