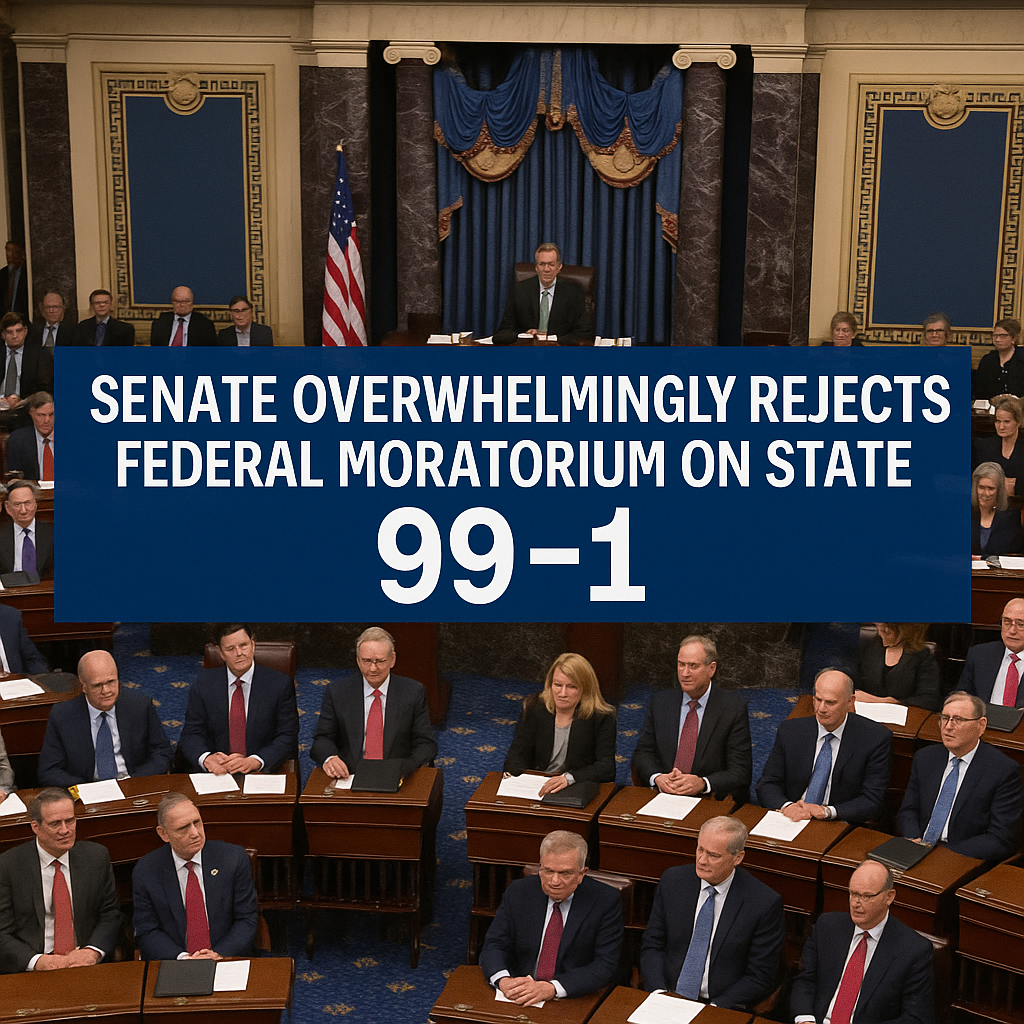

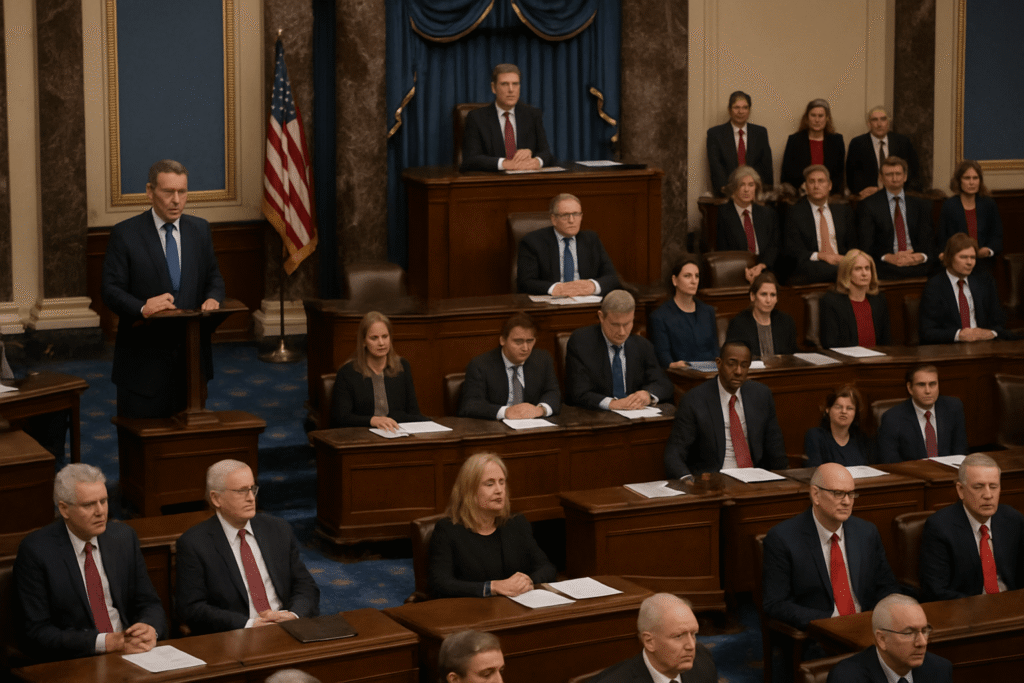

In a powerful display of bipartisan unity, the U.S. Senate voted 99–1 on Tuesday to strike down a proposed federal moratorium that would have blocked states from enacting or enforcing their own AI regulations. The rejected provision had been included in President Trump’s sweeping “One Big Beautiful Bill,” a tax and spending package aimed at reshaping the nation’s digital and economic infrastructure.

The Senate’s move sends a clear message: states will retain their authority to regulate artificial intelligence, a rapidly evolving technology that lawmakers, families, and industry leaders agree must be handled with care and urgency.

States Push Back Against Federal Preemption

The debate’s central issue was control: should the federal government or individual states have more influence over how AI is regulated in the US going forward?

The moratorium, originally pitched as a 10-year pause on state-level AI laws (later amended to five years), would have required states to relinquish their regulatory powers in exchange for access to federal AI infrastructure funding. That tradeoff drew fierce backlash from across the political spectrum.

Eighteen governors, including Republican leaders like Arkansas Gov. Sarah Huckabee Sanders, and dozens of attorneys general condemned the proposal, warning it would undermine local protections already in place. “States are not waiting for Washington to catch up,” said one senator. Sen. Marsha Blackburn (R-Tenn.) co-led the amendment to eliminate the clause.

Blackburn added, “States are leading efforts to shield children from digital exploitation and to defend artists from AI abuse. Taking that away in exchange for funding is a step too far.”

Only One Senator Opposed the Repeal

In a near-unanimous vote, Sen. Thom Tillis (R-N.C.) stood alone in opposing the amendment. Tillis argued that a temporary pause could have provided clarity for businesses navigating a complex regulatory landscape. But his lone dissent was drowned out by a rare and resounding bipartisan agreement.

Public Safety and AI Accountability at the Forefront

Advocacy groups, digital safety coalitions, and even grieving families rallied in support of preserving state oversight. Their concern: without local regulation, AI technologies could lead to irreversible harm, from deepfake scams and voice cloning to biased algorithms affecting employment, healthcare, and law enforcement.

Some parents testified about AI-generated child abuse imagery circulating online, calling for urgent legislative action, not delay. Others highlighted real-life impacts of AI-driven decisions that disproportionately harmed vulnerable communities.

The Senate responded not just to political pressure but to a growing moral imperative: keeping protections intact while federal standards remain unfinished.

Big Tech’s Influence Sparks Debate

While the tech industry lobbied in favor of the moratorium, claiming it would simplify compliance and enhance America’s edge in AI innovation, critics weren’t convinced.

OpenAI CEO Sam Altman argued that streamlined rules would strengthen U.S. competitiveness against nations like China. But opponents labeled the proposal a “regulatory holiday” for Big Tech, one that would let companies police themselves in the absence of government guardrails.

“Giving Silicon Valley a free pass right now would be a dangerous precedent,” said Sen. Maria Cantwell (D-Wash.). “This is not the time for less accountability.”

What Happens Now?

With the moratorium decisively rejected, AI regulation in the US will remain a state-driven effort, at least for now. Twenty-eight states, along with the District of Columbia and several U.S. territories, are already advancing legislation targeting deepfakes, algorithmic discrimination, biometric data use, and job automation.

However, this decentralized approach means companies may soon face a complex patchwork of AI laws, varying wildly from state to state. While some lawmakers believe that pressure will eventually force Congress to create a unified framework, others argue that local innovation can lead to better protections and faster responses.

Sen. Blackburn concluded, “Until we pass comprehensive federal AI legislation, including the Kids Online Safety Act, we must trust states to keep doing the hard work.”